I was thinking about the fate of the atlas and the roadmap for my “Days of Future Past” series. It took me back to my teenage years in New Delhi, when I relied on a paper roadmap of the city’s complex road network. I used that roadmap to learn the city’s routes by heart! I would help my parents navigate on our weekend outings into the city. This was nearly 20 years ago, and it struck me that I never use a paper map anymore 🤯

So, I thought I would explore “roadmaps” as a concept with Midjourney (MJ). It did not quite go as planned. I think I broke it! Here is the list of prompts I tried, where you can see MJ hallucinating (and Bing working):

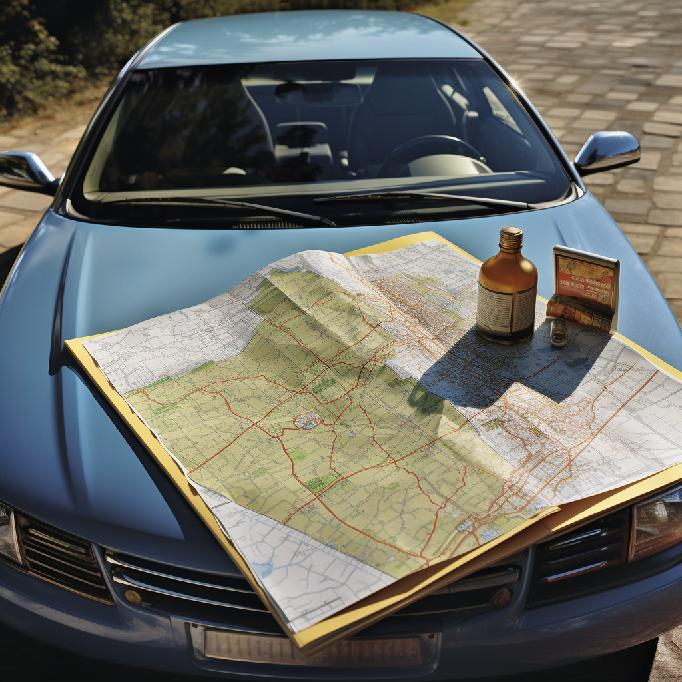

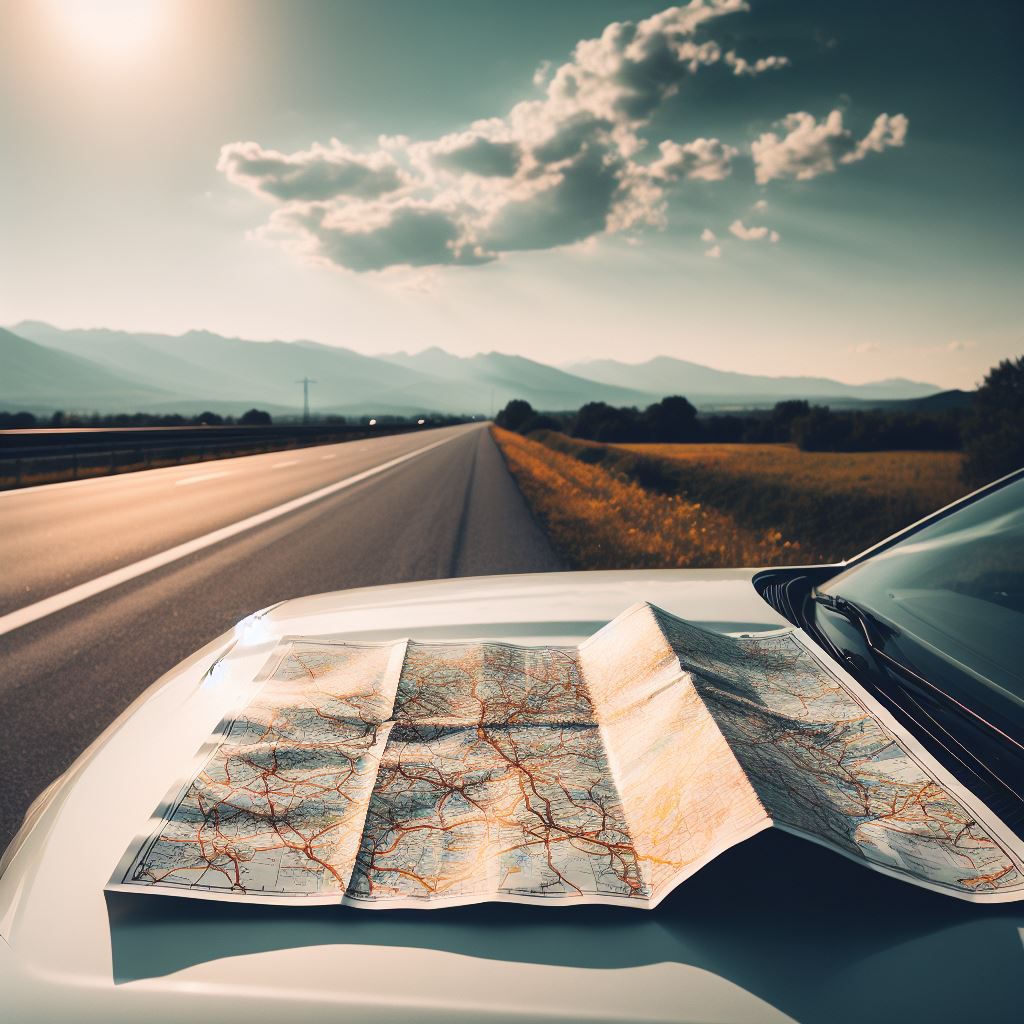

- Prompt#1: “a road map, spread out on the hood of a car; the car is parked on the side of a highway, on a clear, bright sunny day.”

- Variation on Image#2 from Prompt#1

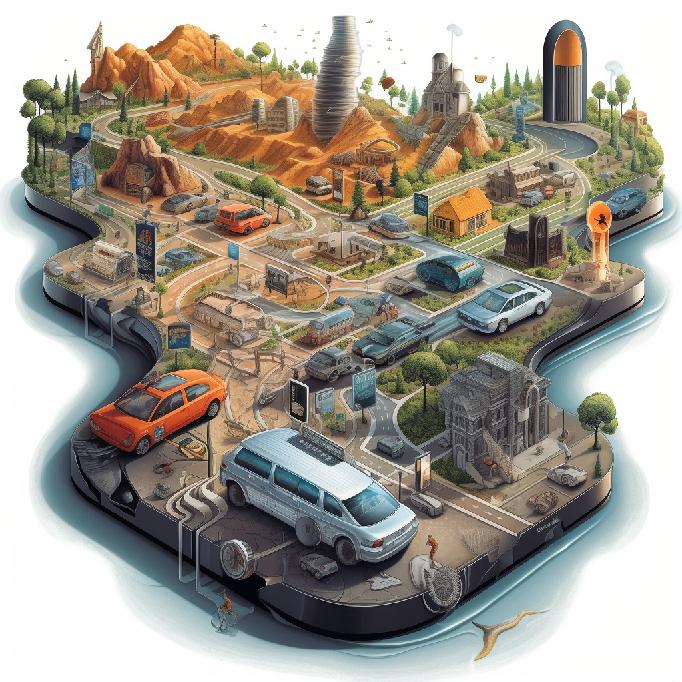

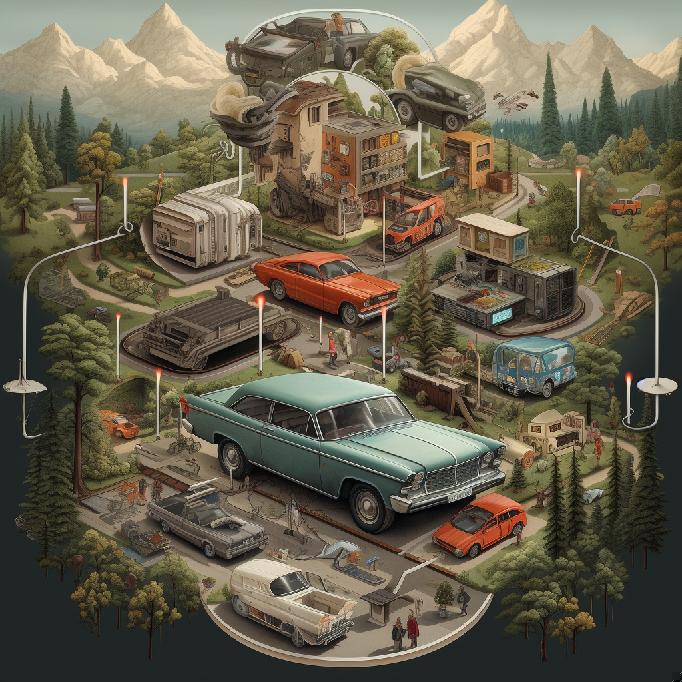

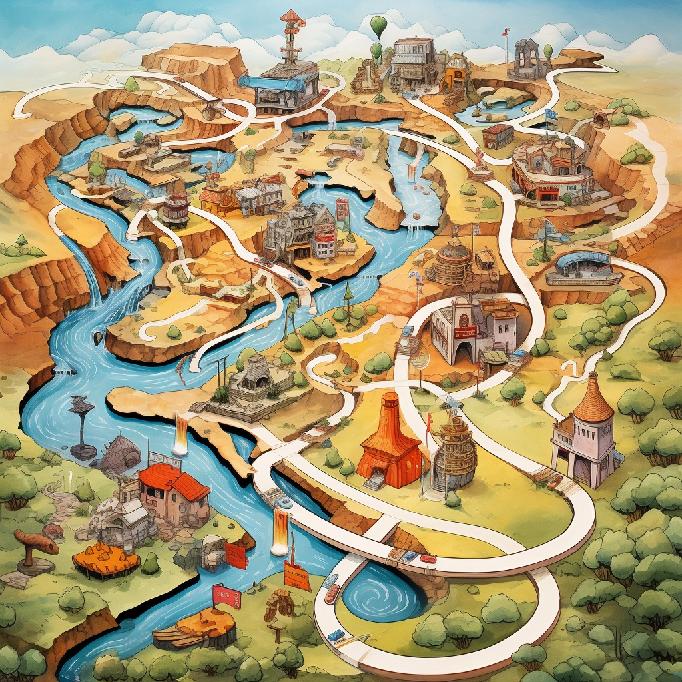

- Prompt#2: “a roadmap on the hood of a car”

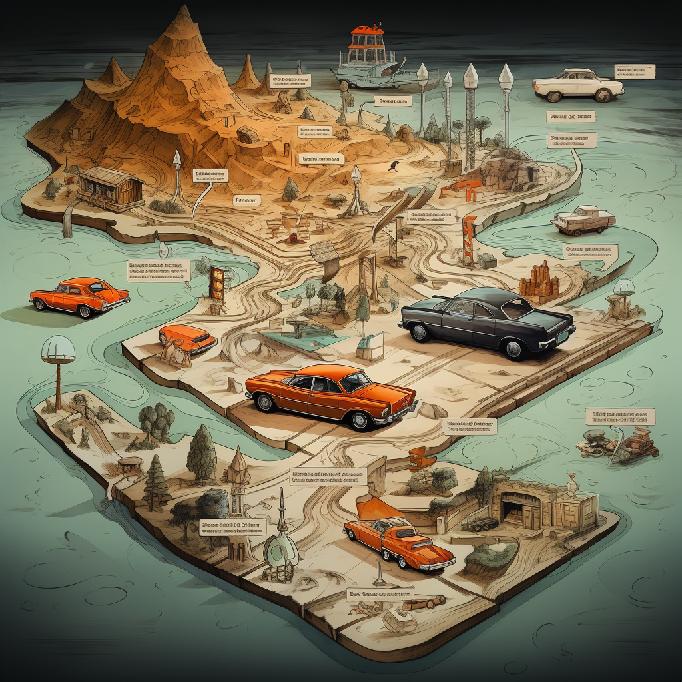

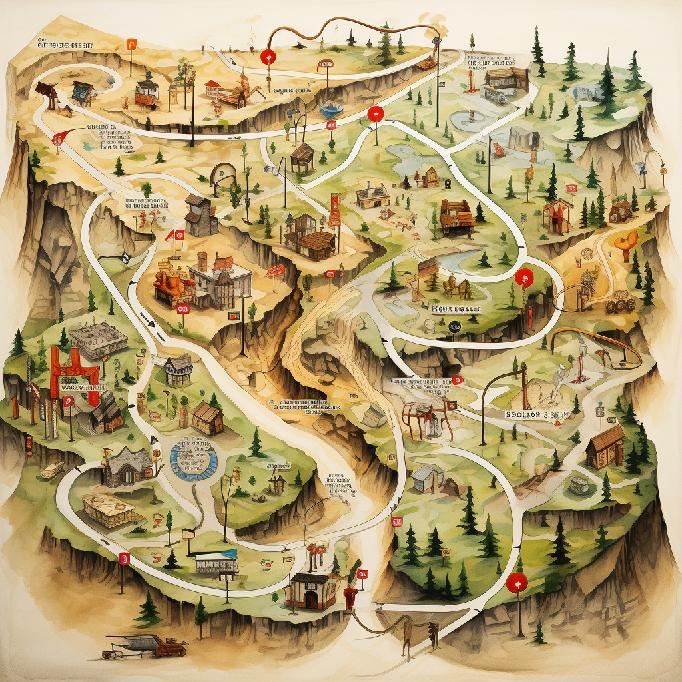

- Prompt#3: “a road map on the hood of a car”

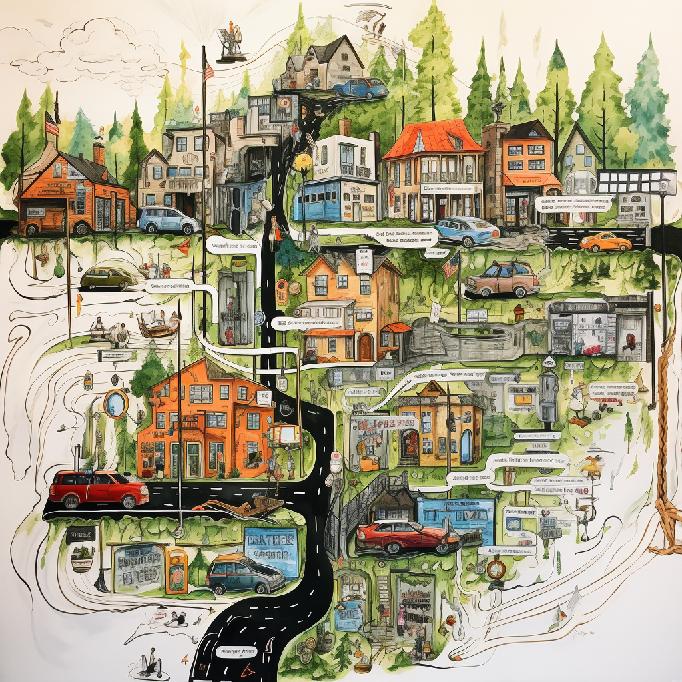

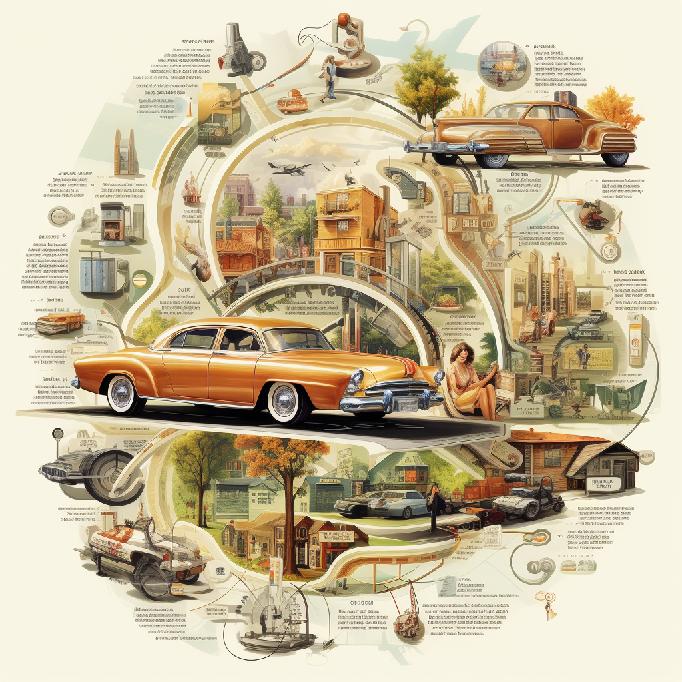

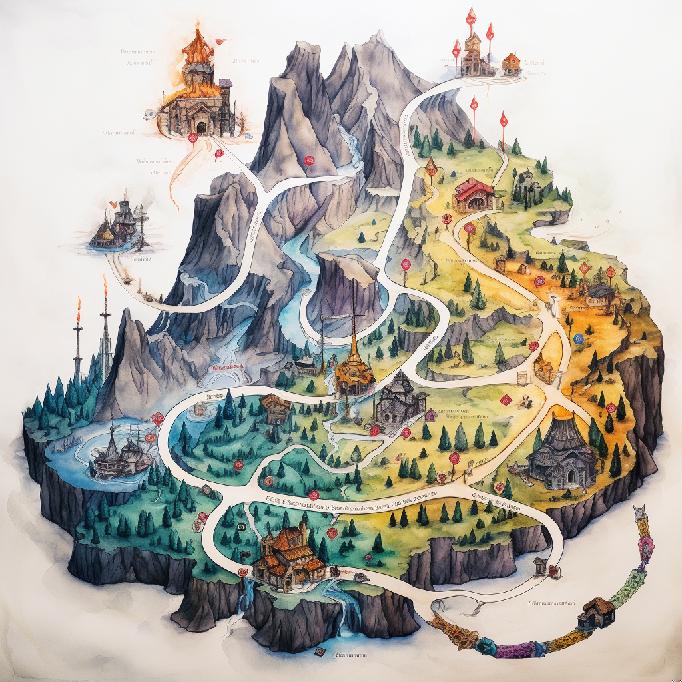

- Prompt#4: “a road map”

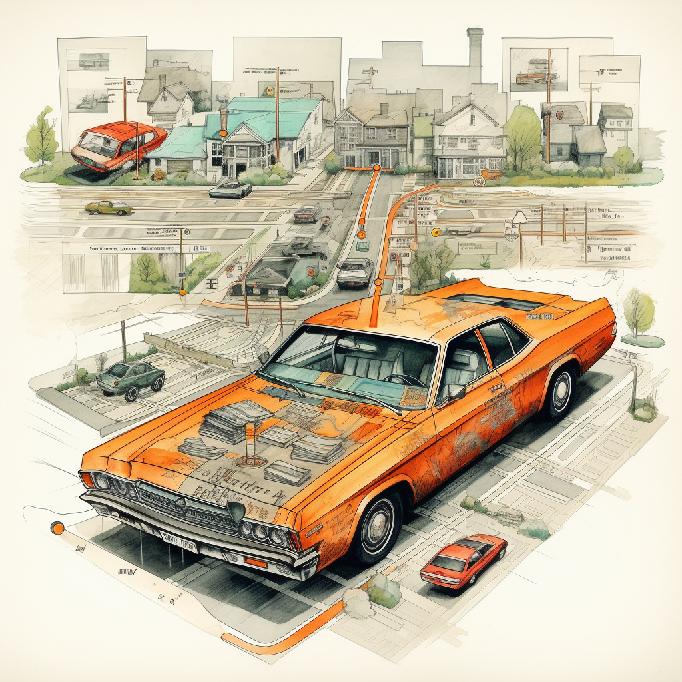

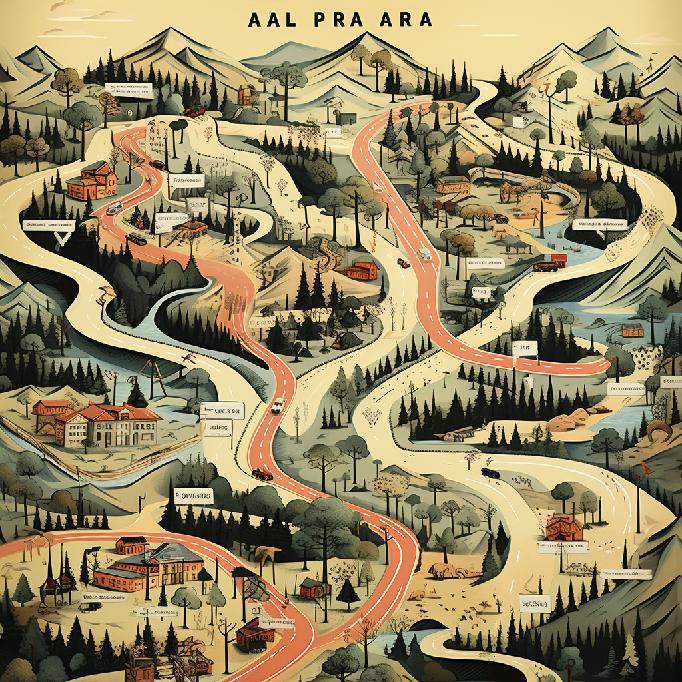

- Prompt#5: “a roadmap”

- Bing: “Create an image of a road map, spread out on the hood of a car; the car is parked on the side of a highway, on a clear, bright sunny day.”

- Endnote

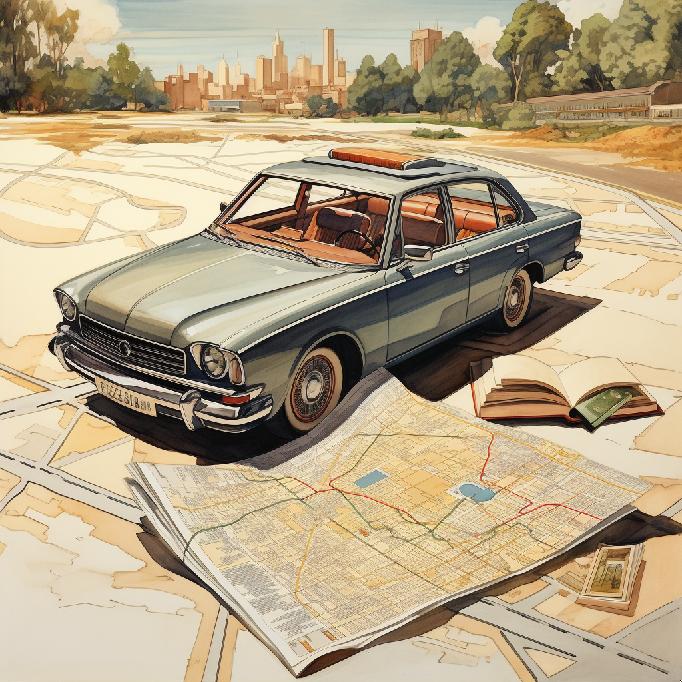

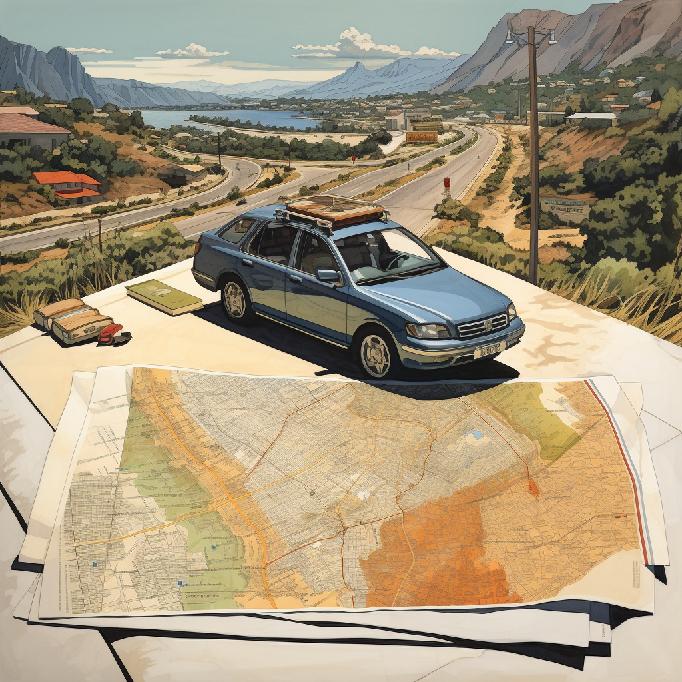

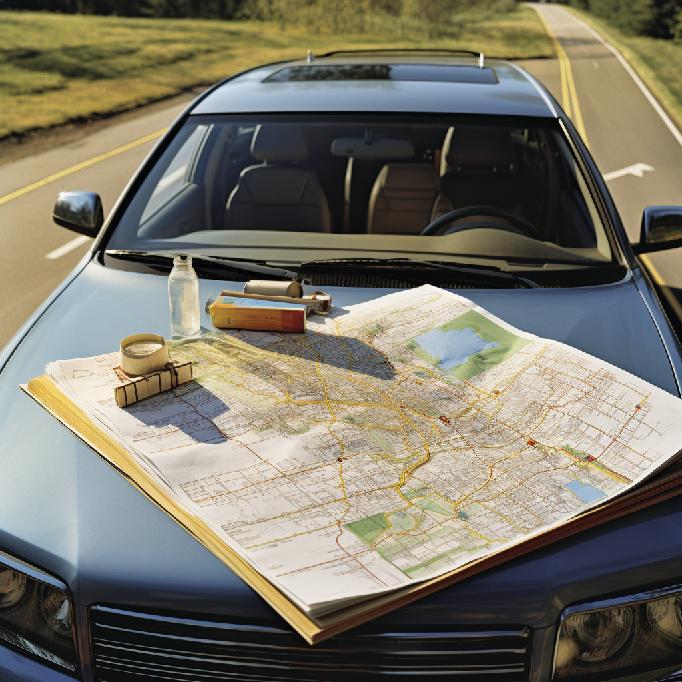

Prompt#1: “a road map, spread out on the hood of a car; the car is parked on the side of a highway, on a clear, bright sunny day.”

Yes, I ran it four times. Each run gave me 4 images, so 16 images in all! Only the second image in the first set came reasonably close to what I was looking for. In all the other cases, the map is lying on the road or, worse, the car appears to be a toy car sitting on a table that also holds a road map. The model is hallucinating.

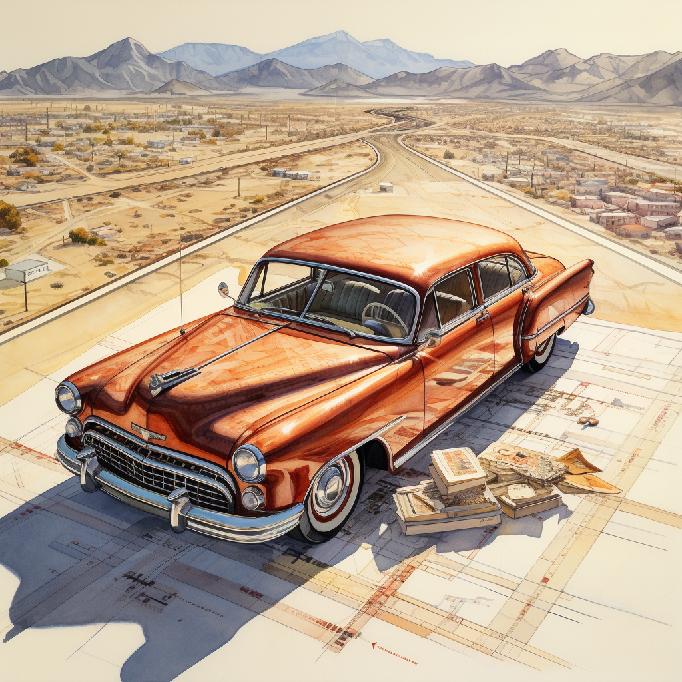

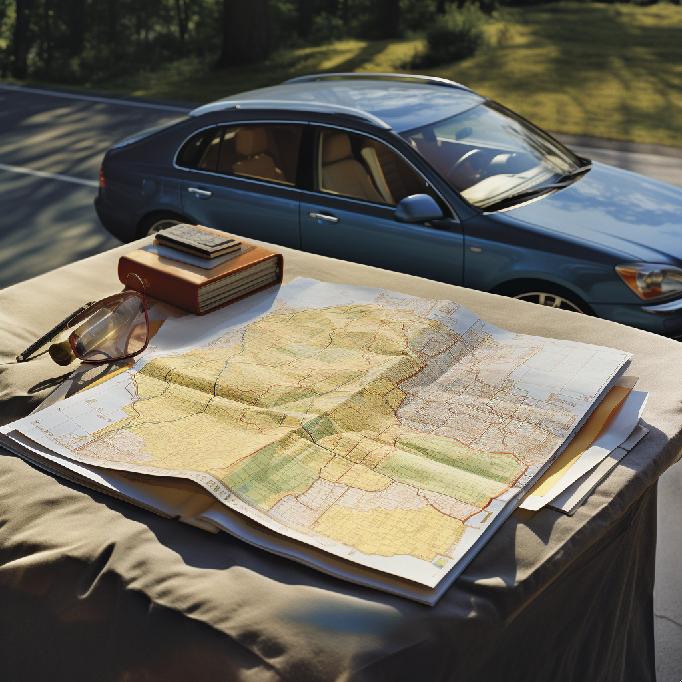

Variation on Image#2 from Prompt#1

I used MJ’s tooling to iterate on the second image in the set above and, to be frank, got some acceptable results. So while the model was hallucinating a little, its tooling for guiding image generation is certainly useful. That speaks volumes about the value of UX design around GenAI models.

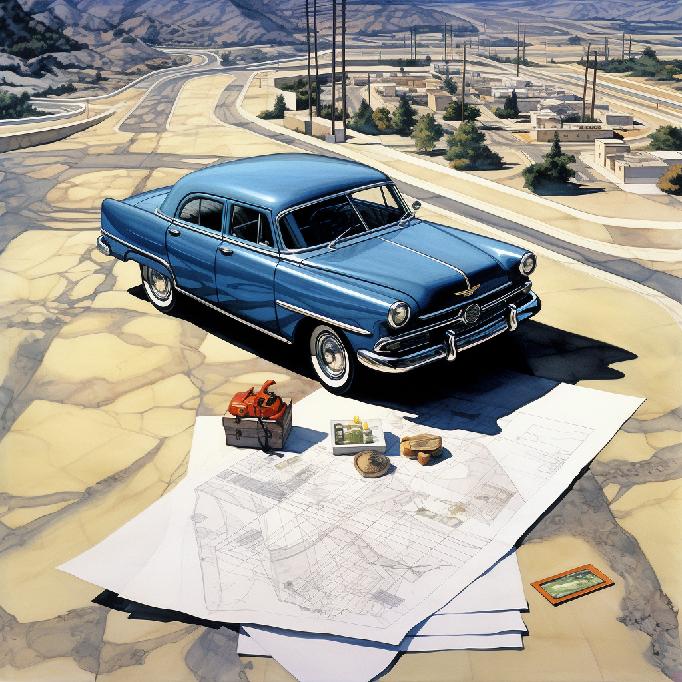

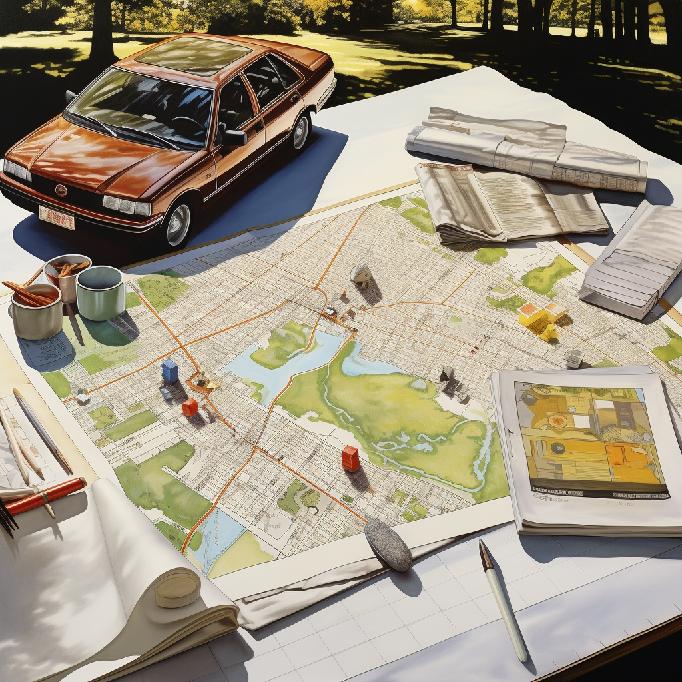

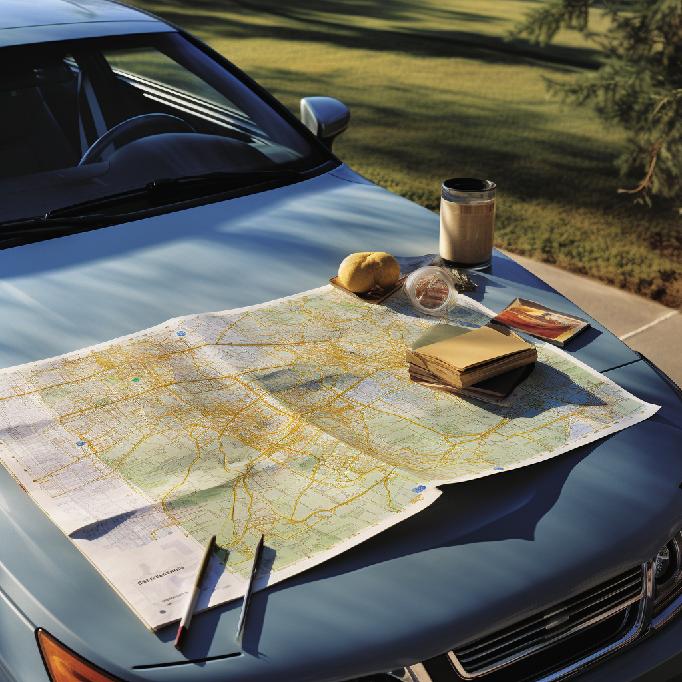

Prompt#2: “a roadmap on the hood of a car”

So I simplified things somewhat. This is how I debug prompts. I wanted to see if MJ understood the basic concept of a roadmap on a car. Instead, I got more hallucinations, twice over…

These generations are very abstract and wild! MJ went “above and beyond” on this one, a little too far. None of them are maps! But they all do show roads, I suppose.

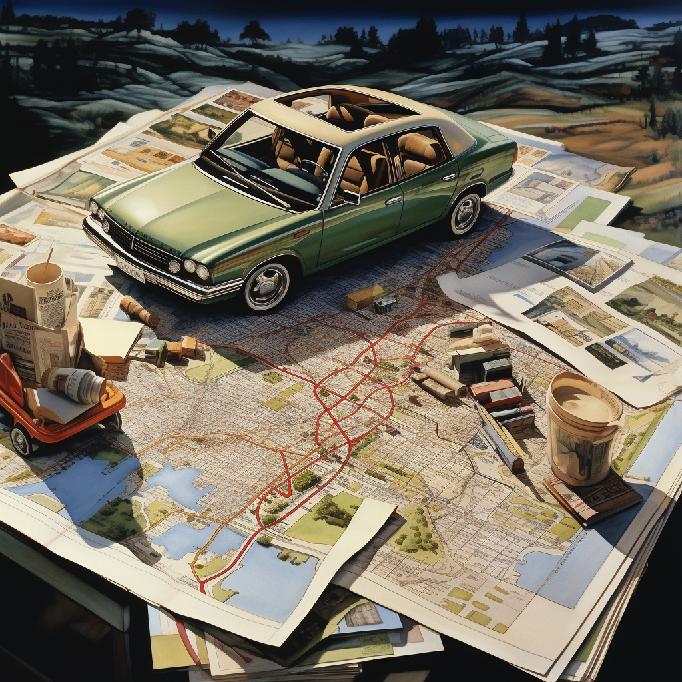

Prompt#3: “a road map on the hood of a car”

Then I figured that maybe I should split “roadmap” into two words: “road map”. That still did not help. The cars are now inside the map itself.

Prompt#4: “a road map”

Desperation was now kicking in. I just wanted to see whether MJ even understood what a “road map” was. It did not. We are now squarely in the territory of cartoon/comic illustrations of roadways with cars on them. I do think these would pass for road maps in a comic book … so that is something, I suppose.

Prompt#5: “a roadmap”

OK then, what about “roadmap” as one word? We now get images of roadways, but no roadmap.

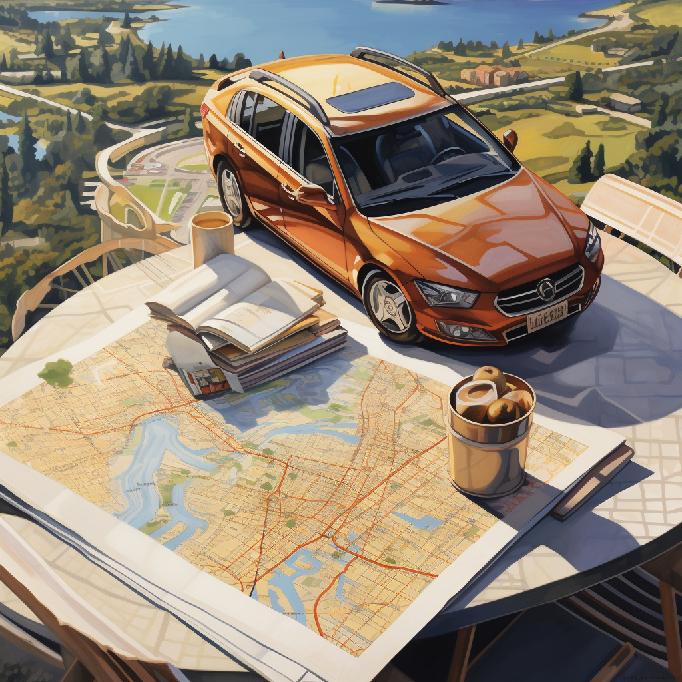

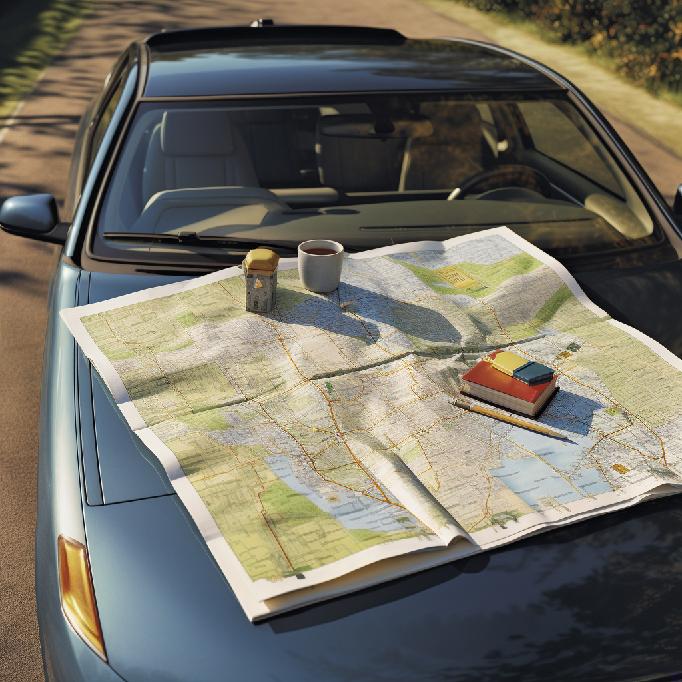

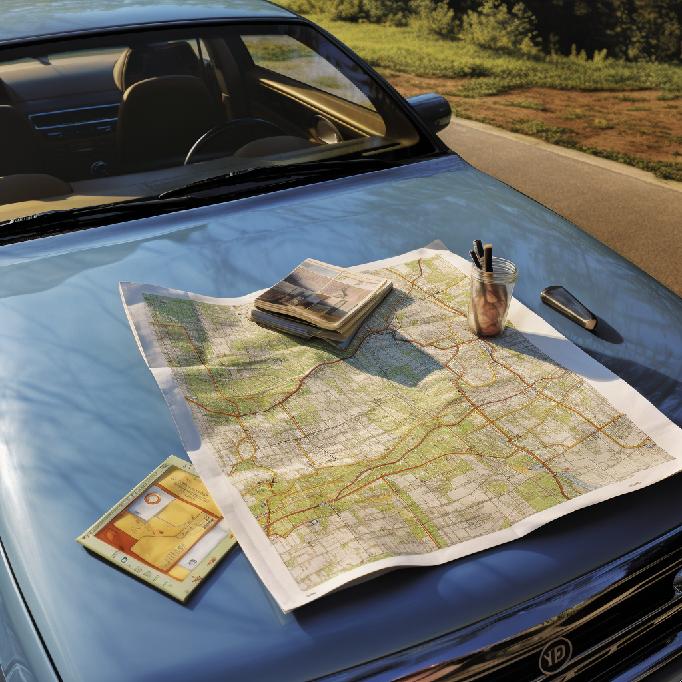

Bing: “Create an image of a road map, spread out on the hood of a car; the car is parked on the side of a highway, on a clear, bright sunny day.”

Bing got it. The images are pretty vanilla, but very, very functional!

Endnote

MJ’s bias towards creativity and imagination does get it into trouble. This is where the results from Bing/DALL-E3 shine: they are practical and functional … I can see myself using these images in a marketing campaign, a presentation, or ads on Google/Facebook. I would probably not use MJ’s results much.

That suggests to me that DALL-E3 has a scalable business model, which brings me to a useful question for Midjourney: what is the business endgame for imaginative-looking images?

— vijay, thinking about debugging a lot on a Sunday evening.
